Large Language Model Parameters Explained

When we use a large language model (LLM), we can set a few parameters to customize its behavior. Even though these parameters are used in every application built on an LLM, they are still widely misunderstood. To understand what they are and how they affect the model, let’s first look at how an LLM outputs text.

The sole task of an LLM is to predict the next token (a word or a piece of a word).

Let’s assume we ask the model a question, and that this question has N tokens. The model’s task is now to predict the (N+1)th token. To do this, the model first looks at the Nth token’s “final hidden state vector”. This vector represents the internal state of the model after processing the entire input sequence up to and including the Nth token.

This final hidden state vector is then multiplied by the transpose of the embedding matrix that was used to convert the original N input tokens into vectors. This transposed embedding matrix is called the “unembedding matrix”. (The embedding matrix is a matrix of weights learned during training. Some models learn a separate output matrix instead of reusing the embedding weights, but the idea is the same.)

The output of this operation is a set of logits, one for each token in the vocabulary. Logits are not probabilities yet: they are raw scores that can be any real number, and they do not add up to 1. That is why they are called unnormalized log probabilities. Before a token can be chosen, the logits have to be normalized so that they add up to 1.

This normalization is done by the softmax function, which turns the logits into a probability distribution over the possible tokens. The output token is then chosen based on this distribution. For the (N+2)th token the whole process repeats, but now the input includes the newly generated (N+1)th token. Essentially, the sequence of N+1 tokens becomes the new input.
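The steps described so far can be sketched in a few lines of plain Python. This is a toy illustration under stated assumptions, not how any real model is implemented: the three-word vocabulary, the 2-dimensional `embedding` matrix, the `hidden` vector and the helper names are all made up for the example.

```python
import math
import random

def logits_from_hidden(hidden, embedding):
    # The embedding matrix has one row per vocabulary token. Taking the dot
    # product of the hidden state with every row is the same as multiplying
    # the hidden state by the transposed ("unembedding") matrix.
    return [sum(h * e for h, e in zip(hidden, row)) for row in embedding]

def softmax(logits):
    # Subtract the largest logit first for numerical stability.
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]

def sample_next_token(hidden, embedding, vocab, rng):
    # Logits -> probabilities -> one token drawn from that distribution.
    probs = softmax(logits_from_hidden(hidden, embedding))
    token = rng.choices(vocab, weights=probs, k=1)[0]
    return token, probs

# Hypothetical toy values, chosen only for illustration.
vocab = ["cat", "dog", "fish"]
embedding = [[1.0, 0.0], [0.5, 0.5], [0.0, 1.0]]
hidden = [2.0, 0.0]  # stands in for the final hidden state of the Nth token

rng = random.Random(0)
token, probs = sample_next_token(hidden, embedding, vocab, rng)
print(token, [round(p, 3) for p in probs])
```

In a real model the generated token would be appended to the input and the whole computation would run again to produce the following token.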

Now that we know how these models output text, let’s see how the two main parameters affect the model’s output.

Temperature

This is the most misunderstood parameter of all. Most people think of this parameter as the value that controls the creativity of a model. While that is true, it’s just a byproduct of this parameter.

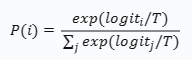

Temperature comes into play in the step where the softmax is calculated. Below is a rough representation of how the softmax function turns logits into probabilities.

In the above equation, T is the temperature value.
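For reference, the temperature-scaled softmax is commonly written as follows. This is the standard textbook form rather than a transcription of the figure, and the notation is assumed here: $z_i$ is the logit of token $i$, $p_i$ is its resulting probability, and $j$ runs over every token in the vocabulary.

$$
p_i = \frac{\exp(z_i / T)}{\sum_{j} \exp(z_j / T)}
$$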

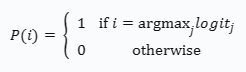

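As T approaches 0, the distribution collapses onto the token with the largest logit. (At exactly T = 0 the division is undefined, so a temperature of 0 is typically treated as simply picking the highest-scoring token.)

This means that the probability of the highest-scoring token is 1 and all other tokens have a probability of 0.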

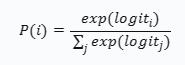

When T is 1, the equation reduces to the standard softmax, because dividing the logits by 1 leaves them unchanged.

Simply said, the temperature parameter T controls the “softness” of the probability distribution. When T is high, the distribution becomes softer and more uniform, so lower-probability tokens are picked more often and the output becomes more creative and diverse. When T is low, the distribution becomes sharper and more peaked, so the model sticks to its highest-probability tokens and the output becomes more predictable and repetitive.
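To make the effect concrete, here is a minimal sketch of a temperature-scaled softmax applied to the same logits at three temperatures. The function name `softmax_with_temperature` and the example logits are assumptions made for illustration, and the sketch rejects T = 0 rather than guessing how a particular API handles it.

```python
import math

def softmax_with_temperature(logits, temperature):
    # Dividing by zero is undefined, so T = 0 has to be handled separately
    # (for example, by picking the largest logit directly).
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [x / temperature for x in logits]
    m = max(scaled)  # subtract the maximum for numerical stability
    exps = [math.exp(s - m) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]

logits = [2.0, 1.0, 0.0]  # hypothetical scores for three tokens

for t in (0.1, 1.0, 10.0):
    probs = softmax_with_temperature(logits, t)
    print(f"T={t}: {[round(p, 3) for p in probs]}")
```

At T = 0.1 nearly all of the probability lands on the first token, at T = 1 the plain softmax is recovered, and at T = 10 the three probabilities end up close to each other.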

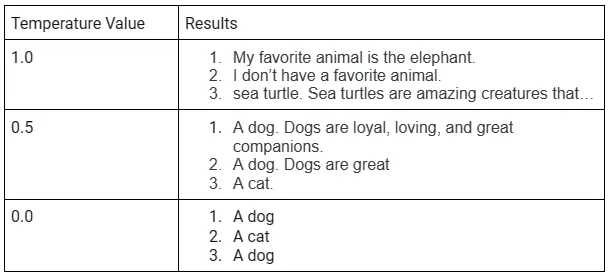

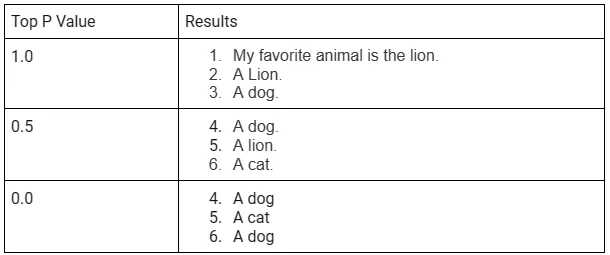

Let’s look at a few examples of how temperature affects the model outputs. (All examples use the text-davinci-002 model, with Top P kept at 1.)

We give the model the same starting text at different temperature values and compare what it generates.

Text : “My favorite animal is ”

As we decrease the temperature, the outputs become more consistent and less detailed, but highly focused.

Top P

Top P (also known as nucleus sampling) is a decoding method that restricts which tokens can be picked from the probability distribution before the output token is sampled.

Let’s assume you set the Top P value as P (0 ≤ P ≤ 1).

From the previous step we have a set of candidate words, each with its own probability. Top P finds the smallest group of the most probable words whose cumulative probability reaches the value of P, and the output word is sampled from that group only. This way, the number of words in the group can grow and shrink dynamically according to the next-word probability distribution.

If the value of P is 0, then “Top P” will select the word with the highest probability. This is equivalent to greedy decoding.

If the value of P is 1, then “Top P” will select the entire set of words. This is equivalent to sampling from the entire distribution.
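The selection rule can be sketched as follows. This is an illustrative version under assumptions, not the code of any particular library: the helper names `top_p_filter` and `sample_top_p` and the example probabilities are invented, and the sketch stops as soon as the cumulative probability is at least P (some descriptions use a strict "exceeds" instead).

```python
import random

def top_p_filter(probs, p):
    # Visit token indices from most to least probable.
    order = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)
    kept, cumulative = [], 0.0
    for i in order:
        kept.append(i)
        cumulative += probs[i]
        if cumulative >= p:  # smallest group whose total reaches P
            break
    # Renormalize so the kept probabilities add up to 1 again.
    total = sum(probs[i] for i in kept)
    return {i: probs[i] / total for i in kept}

def sample_top_p(probs, p, rng):
    # Sample one token index from the filtered, renormalized group.
    nucleus = top_p_filter(probs, p)
    indices = list(nucleus)
    weights = [nucleus[i] for i in indices]
    return rng.choices(indices, weights=weights, k=1)[0]

probs = [0.5, 0.3, 0.15, 0.05]  # hypothetical next-token probabilities

print(top_p_filter(probs, 0.7))  # keeps the two most probable tokens
print(top_p_filter(probs, 0.0))  # keeps only the most probable token
print(top_p_filter(probs, 1.0))  # keeps every token
print(sample_top_p(probs, 0.7, random.Random(0)))
```

With P = 0 only the single most probable token survives, which matches greedy decoding, and with P = 1 nothing is filtered out.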

For Top P examples, the same text-davinci-002 model is used with a constant temperature of 0.5.

With a higher value of P, the LLM has more options to choose from, resulting in more varied output. Conversely, lower values restrict the choices and make the output more focused and coherent.

Conclusion

In conclusion, we now have an explanation of two important parameters that can be used to customize the behavior of large language models (LLMs): Temperature and Top P.

The primary task of an LLM is to predict the next token based on the input sequence. To achieve this, the model calculates a set of logits (unnormalized log probabilities), which are then normalized using the softmax function to generate a probability distribution over the possible tokens. Understanding and appropriately using these parameters can significantly change the output of large language models, allowing users to tailor the model’s behavior to their specific needs. Temperature and Top P provide valuable tools to strike a balance between creativity and predictability, enabling LLMs to be more versatile and better suited for various applications.